Inspired by JD Pressman.

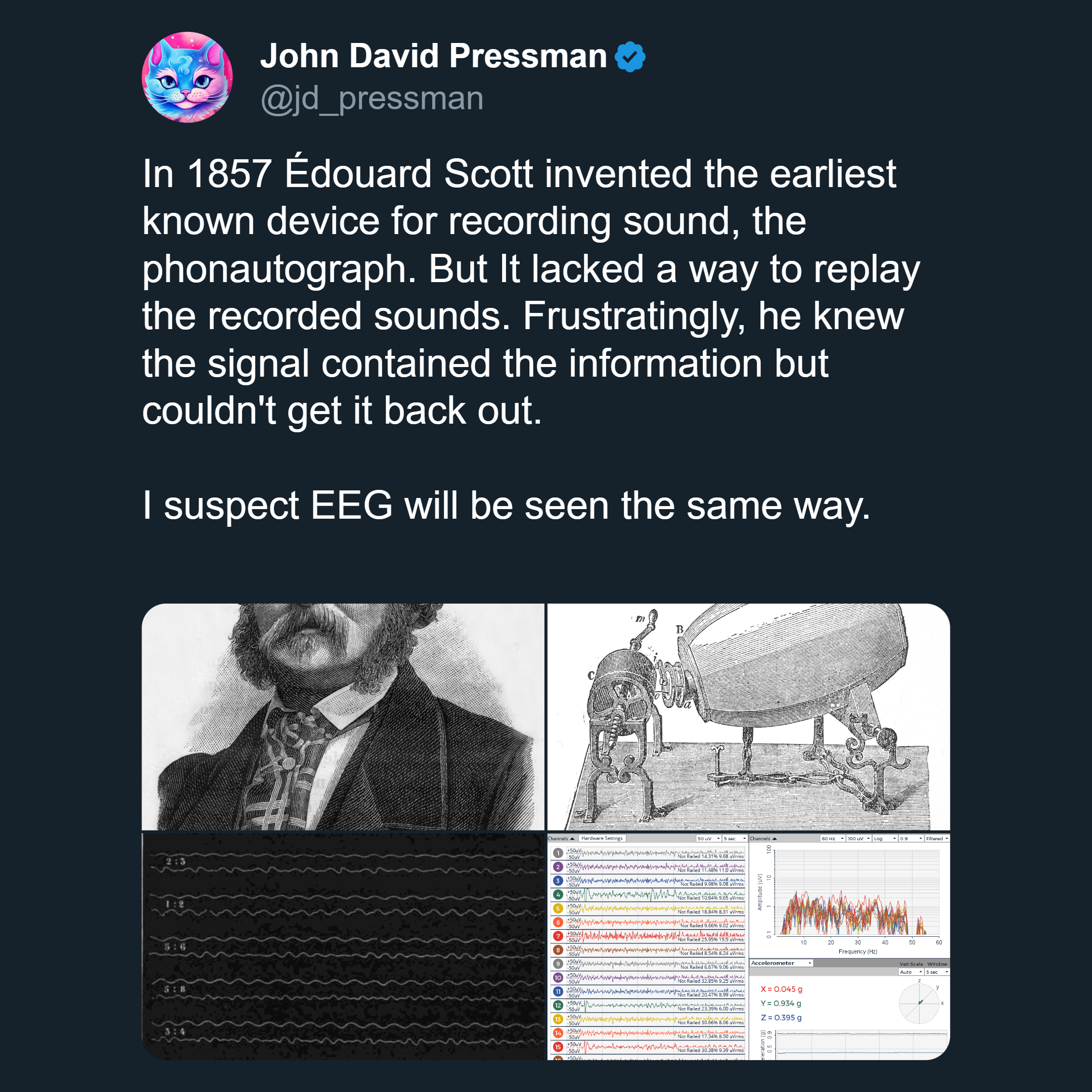

About a year ago, researchers applied deep learning models to EEG data to decode imagined speech with 60% accuracy. They accomplished this with only 22 study participants, contributing 1,000 datapoints each. Research applying deep learning to EEG is still early and its results are modest, but this paper presents an early version of what is essentially mind-reading technology. As scaling laws have taught us, when machine learning models express a capability in a buggy and unreliable manner, larger versions will express it strongly and reliably. So we can expect that, apart from some algorithmic considerations, the primary bottleneck for improving this technology is gathering data and compute at a large enough scale. That is, if we managed to get millions of datapoints on imagined speech, we could easily build robust mind-reading technology.

But this sort of data/compute scaling has historically been difficult! In particular, it's very hard to get clean, labeled data at scale unless there's some preexisting repository of such data that you can draw on. Instead, the biggest successes in deep learning, like language and image models, have come from finding vast pools of unlabeled data and building architectures that can leverage it.

So, how could we apply this to EEG? I suspect that we'll want data that's minimally labeled and maximally easy to harvest. As with language models, I think we should train a machine learning model for autoregression on unlabeled EEG data: collect EEG data from a large sample of individuals going about their daily lives, and train a model to predict new samples from previous samples. We'd probably want a different architecture than language transformers (at minimum, training on learned latent codes z rather than raw EEG samples), but deep learning research is unlikely to be the bottleneck. [1]
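
To make the objective concrete: in autoregressive training the targets are just later samples of the same recording, so no labels are needed. The toy sketch below is only an illustration under loud assumptions. It swaps in a linear autoregressive model fit by least squares for the deep network the text has in mind, it uses a synthetic 10 Hz sine at an assumed 256 Hz sample rate in place of one EEG channel, and it works on raw samples rather than latents z. The helper names (`fit_ar`, `solve`, `predict_next`, `generate`) are hypothetical.

```python
import math

def fit_ar(signal, order):
    """Fit x[t] ~ sum_k a[k] * x[t-1-k] by ordinary least squares.

    Each target is simply a later sample of the same unlabeled signal,
    which is what makes next-step prediction self-supervised.
    """
    rows = [[signal[t - 1 - k] for k in range(order)] for t in range(order, len(signal))]
    targets = signal[order:]
    # Normal equations: (X^T X) a = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(order)] for i in range(order)]
    xty = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(order)]
    return solve(xtx, xty)

def solve(a, b):
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def predict_next(history, coeffs):
    """One-step prediction from the most recent len(coeffs) samples."""
    return sum(a * history[-1 - k] for k, a in enumerate(coeffs))

def generate(history, coeffs, steps):
    """Autoregressive generation: feed each prediction back in as input."""
    out = list(history)
    for _ in range(steps):
        out.append(predict_next(out, coeffs))
    return out[len(history):]

if __name__ == "__main__":
    w = 2 * math.pi * 10 / 256  # 10 Hz rhythm at an assumed 256 Hz sample rate
    signal = [math.sin(w * t) for t in range(512)]
    coeffs = fit_ar(signal, order=2)
    # A pure sine obeys x[t] = 2cos(w) x[t-1] - x[t-2], so the fit should recover that.
    print(coeffs, [2 * math.cos(w), -1.0])
    print(generate(signal, coeffs, steps=5))
```

The same two steps, fitting on "predict the next sample" and then rolling the model forward on its own outputs, are what a large EEG model would do, just with a far more expressive predictor and multichannel latent inputs.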

How much data would we need? Let's say we wanted to create a model on the scale of Llama-70b: powerful enough to be humanlike, coherent, and often smart. Llama-70b was trained on 2 trillion tokens. We could probably wring out a bit more performance per token by increasing model size, reducing the total to 1.5T tokens. We could also run the data for, say, 15 epochs, reducing the number of unique tokens we need to 100 billion. If it's natural to divide thought into 3 tokens per second, then in total we'll need about 9 million hours of EEG data. [2] We could probably decrease that a bit more with clever data efficiency tricks [3], but we'll still most likely need millions of hours. This would involve, for example, collecting about two hours of data a day from 10,000 people for 500 days. This is difficult, but it could be doable if you have a billion dollars to spend. If things continue as they are, an AGI lab could do it for a small portion of their total budget in a few years.
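
The estimate is a short chain of divisions, so it is easy to check and to vary. Here is a minimal calculator for it, taking the paragraph's inputs (1.5T training tokens, 15 epochs, a cohort of 10,000 people over 500 days) and sweeping the 3 to 10 tokens per second range from footnote [2]. The function names are hypothetical.

```python
def eeg_hours_needed(total_tokens, epochs, tokens_per_second):
    """Return (unique tokens, hours of EEG recording) under the stated assumptions."""
    unique_tokens = total_tokens / epochs        # each token is seen `epochs` times
    seconds = unique_tokens / tokens_per_second  # recording time that yields them
    return unique_tokens, seconds / 3600

def hours_per_person_per_day(total_hours, people, days):
    """Average daily recording time each participant must contribute."""
    return total_hours / (people * days)

if __name__ == "__main__":
    for tps in (3, 5, 10):
        unique, hours = eeg_hours_needed(1.5e12, 15, tps)
        daily = hours_per_person_per_day(hours, people=10_000, days=500)
        print(f"{tps} tokens/s -> {unique:.2e} unique tokens, "
              f"{hours / 1e6:.1f}M hours, {daily:.2f} h/person/day")
    # First line: 3 tokens/s -> 1.00e+11 unique tokens, 9.3M hours, 1.85 h/person/day
```

Because every step is a division, halving any one requirement (tokens, or the hours implied by a higher token rate) halves the recording budget.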

The result of such a training run would be a model that can autoregressively generate EEG data. Given how much we can already extract from EEG data, it clearly contains a massive amount of information. It's quite possible, in fact, that much of the information constituting our conscious experience is held within EEG data. If so, a model that can generate EEG data would essentially be a general mind uploader. The simulation might not encompass all experience, and it might be missing detail and coherence, but it would be a working mind upload. We could communicate with such simulated minds by translating their EEG input/output into text or other downstream channels. We could use the tools we've developed for neural networks to modify and merge human and machine minds. These capabilities make this form of mind uploading a compelling route to ascension for humanity. See Beren Millidge's "BCIs and the ecosystem of modular minds":

> If we can get BCI tech working, which seems feasible within a few decades, then it will actually be surprisingly straightforward to merge humans with DL systems. This will allow a lot of highly futuristic seeming abilities such as:
>
> 1.) The ability to directly interface with and communicate with DL systems with your mind. Integrate new modalities, enhance your sensory cortices with artificial trained ones. Interface with external sensors to incorporate them into your phenomenology. Instant internal language translation by adding language models trained on other languages.
>
> 2.) Matrix-like ability to ‘download’ packages of skills and knowledge into your brain. DL systems will increasingly already have this ability by default. There will exist finetunes and DL models which encode the knowledge for almost any skill which can just be directly integrated into your latent space.
>
> 3.) Direct ‘telepathic’ communication with both DL systems and other humans.
>
> 4.) Exact transfer of memories and phenomenological states between humans and DL systems
>
> 5.) Connecting animals to DL systems and to us; let’s us telepathically communicate with animals.
>
> 6.) Ultimately this will enable the creation of highly networked hive-mind systems/societies of combined DL and human brains.

Of course, in this sense, even language models (particularly base models) are something like mind uploading for the collective unconscious. Insofar as text is the residue of thought, and predicting text therefore involves predicting the mind of its author, even a model trained solely on text acts as a sort of even-lower-fidelity mind upload. Few appreciate the consequences of this.

Footnotes

[1] Insofar as deep learning research is the bottleneck, we should focus on speech autoregression as a testing ground for approaches to EEG.

[2] This is a rather conservative estimate, in my view. 3-10 tokens/frames per second seems like the rough scale of human brain activity (see Madl et al.). However, what might matter far more is information content. 64 channels of EEG data, and all the conscious experience they encompass, might contain far more information for training a model than a token of text. That is, we might expect models to learn faster from EEG data because a single EEG token contains far more useful information than a single text token. It's for this same reason that multimodal text/image models spread the information contained in an image out over many tokens, rather than stuffing it into a single one.
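
For a sense of scale, here is a rough upper bound on raw capacity per token, not on useful information: the bits recorded in one EEG frame versus the maximum entropy of one text token. The 256 Hz sampling rate and 24-bit samples are assumed typical amplifier settings rather than figures from the text, and 32,000 is the Llama 2 tokenizer's vocabulary size. Raw EEG bits include a great deal of noise and redundancy, so this only bounds what a frame could carry.

```python
import math

def raw_bits_per_eeg_frame(channels, sample_rate_hz, frames_per_second, bits_per_sample):
    """Raw ADC bits in one EEG 'token' (frame). An upper bound on capacity:
    much of it is noise or redundancy rather than useful information."""
    samples_per_frame = sample_rate_hz / frames_per_second
    return channels * samples_per_frame * bits_per_sample

def max_bits_per_text_token(vocab_size):
    """Entropy of a uniform distribution over the vocabulary, which is the
    most information a single token can carry."""
    return math.log2(vocab_size)

if __name__ == "__main__":
    # Assumed setup: 64 channels, 256 Hz, 24-bit samples, 3 frames per second.
    eeg = raw_bits_per_eeg_frame(64, 256, 3, 24)
    text = max_bits_per_text_token(32_000)
    print(f"EEG frame: {eeg:,.0f} raw bits; text token: at most {text:.1f} bits")
```

The gap is several orders of magnitude even after discounting most EEG bits as noise, which is the intuition behind expecting more learning per EEG token.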

[3] For instance, we could simultaneously train on some sort of real-time human behavior, e.g. speech, keystrokes, or screen recordings (or maybe even web text data, though it isn't real-time). All of these share massive amounts of information with EEG data: the best way to win at any sort of action prediction involves understanding the actor's mind. So some lessons from each modality might transfer to the others, reducing the amount of EEG data required.
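
One simple way to train on several streams at once, assuming a shared model that can accept any of them, is to choose the source of each training batch at random according to fixed mixture weights. The sketch below shows only that sampling step; the modality names and weights are hypothetical, and `mixture_stream` is an illustrative helper, not an existing API.

```python
import random
from collections import Counter

def mixture_stream(weights, seed=0):
    """Yield modality names in proportion to `weights`, e.g. to decide
    which dataset the next training batch is drawn from."""
    rng = random.Random(seed)  # seeded so the schedule is reproducible
    names = list(weights)
    probs = [weights[n] for n in names]
    while True:
        yield rng.choices(names, weights=probs, k=1)[0]

if __name__ == "__main__":
    # Hypothetical mixture: EEG dominates, the other streams are auxiliary.
    weights = {"eeg": 0.5, "speech": 0.2, "keystrokes": 0.2, "screen": 0.1}
    stream = mixture_stream(weights)
    counts = Counter(next(stream) for _ in range(10_000))
    print({name: counts[name] / 10_000 for name in weights})
```

Tuning these weights is one lever for trading scarce EEG hours against cheaper auxiliary data.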